TikTok Challenges Amnesty’s Claims on Youth Mental Health Risks

In a recent statement, TikTok firmly contested the findings of a report released by Amnesty International. The report alleges that the app is not only failing to protect vulnerable children but is actively steering them toward harmful content related to depression and suicide.

Amnesty’s research, titled “Dragged into the Rabbit Hole,” claims that TikTok’s algorithms draw French children and teenagers who engage with mental health topics into a cycle of distressing content, including self-harm and suicidal themes. The findings echo concerns raised earlier by Finnish public broadcaster Yle, which reported similar patterns among Finnish teens.

With 90 percent of young people in Finland active on TikTok, many of them spending hours scrolling through its content, Amnesty’s findings are especially relevant there. “There’s significant conversation around how TikTok is influencing young people in Finland,” said Mikaela Remes, a spokesperson for Amnesty. She stressed that TikTok is currently not meeting the requirements of the EU’s Digital Services Act (DSA), which obliges platforms to protect minors from algorithmic harms.

The European Commission has initiated formal proceedings against TikTok to investigate potential violations of the DSA, focusing on the protection of minors, transparency in advertising, access to data for researchers, and the management of risks associated with addictive design and harmful content.

A Closer Look at Content Exposure

Under the DSA, platforms like TikTok are required to identify and mitigate risks to children’s rights. Amnesty’s research, however, claims the app has been recommending content related to “suicide challenges.” Lisa Dittmer, an Amnesty International researcher specializing in the digital rights of children and young people, stated, “In just a few hours of using TikTok’s ‘For You’ feed, test accounts posing as teenagers were exposed to videos that romanticized suicide and depicted individuals expressing a desire to end their lives.”

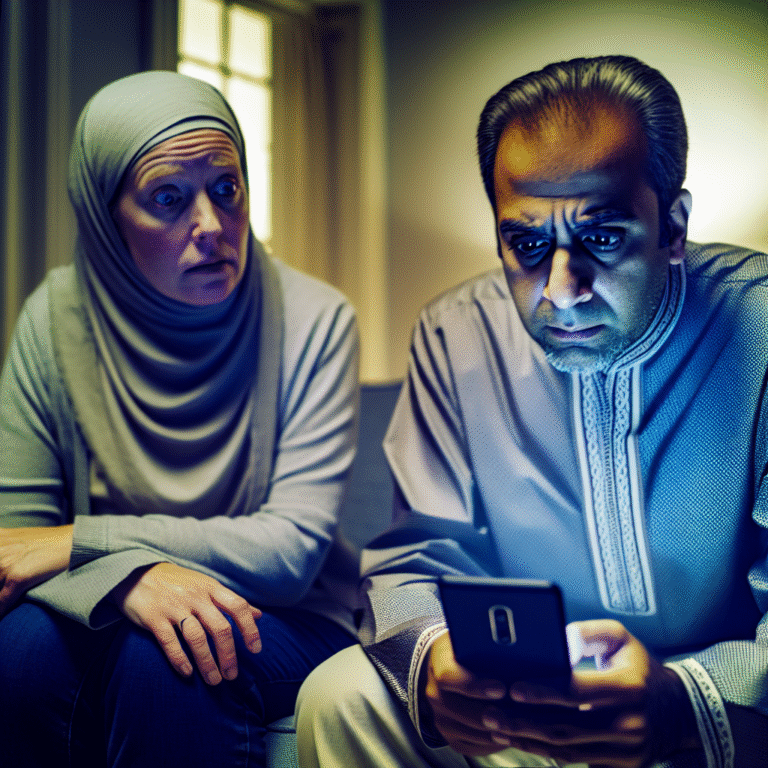

To gather evidence, Amnesty researchers created three TikTok accounts posing as 13-year-olds in France. Even before any personalized content preferences had been established, these accounts were quickly shown videos centered on sadness and disillusionment.

The researchers also identified concerning trends. For example, they noted the emergence of the “lip balm challenge,” which began innocuously but later evolved into a much darker version that encouraged participants to self-harm in response to their feelings of sadness.

Responding to these findings through the Finnish communications firm Manifesto, TikTok defended its platform, pointing to more than 50 features designed to safeguard the well-being of teens. “We proactively create safe and age-appropriate experiences,” the statement said, adding that 90 percent of violative videos are removed before they are ever viewed.

The company also criticized the methodology of Amnesty’s study, arguing that it did not reflect how real users interact with the platform. According to TikTok, the large majority of the content shown to the test accounts was unrelated to self-harm.

As the European Commission’s investigation unfolds, the relationship between social media, mental health, and youth safety remains under close scrutiny. The ongoing debate about TikTok’s influence on young people suggests the issue will stay in the public eye for some time.